
Welcome back to Volts, where every week is Transmission Week!

In my three transmission posts so far, I have focused mostly on the challenges of building new long-distance energy transmission lines in the US — the poor planning, the inefficient financing, the permitting and siting hassles.

Today I’m going to turn to a different subject: the various ways that the performance of the existing transmission system could be upgraded and improved through so-called “grid-enhancing technologies” (GETs).

To be honest, I probably should have tackled this subject first. Though new lines are going to be needed regardless, it is faster and cheaper to upgrade the existing system, with fewer regulatory barriers. GETs can achieve short-term relief from grid congestion while new lines are being developed.

There are three techs that are typically classified as grid-enhancing technologies, and I will focus on them in this post. In my next post, I’ll cover a couple of extra options that I haven’t found any other way to fit in.

Let’s jump in.

(I should note here up top that I will be drawing heavily from a 2019 report on GETs from the Brattle Group and Grid Strategies.)

Closer monitoring to improve line performance

When electricity passes through transmission lines, they heat up. As they heat up, they sag. If too much electricity is run through a line, it can exceed its maximum operating temperature or sag to the point that it brushes up against trees or other structures, potentially sparking fires.

Grid operators want to avoid that, so they do not load lines to their full rated capacity. They set an operational limit well below theoretical capacity, to create a safety margin.

But how far below capacity should the limit be set? That is the question.

The heat and sag of a given line are changing in subtle ways all the time. They vary with the ambient temperature, humidity, barometric pressure, and wind speed. If it’s warmer, the line will heat up faster; if there’s a breeze, it will heat more slowly. Because the heat and sag are in constant flux, so too is the maximum safe capacity of the line.

“The number we love to quote is, an increase in wind blowing across a power line of three feet per second results in a 44 percent increase in the capacity of that power line,” says Jonathan Marmillo, co-founder of LineVision, a company that makes equipment for monitoring lines. “That's the equivalent of a light breeze.”

(Note: this means that the capacity of transmission lines increases as the production of wind energy increases. Handy!)
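For the curious, here is a minimal sketch of the heat balance behind a line rating: the current a line can carry is whatever keeps resistive heating plus solar heating equal to the cooling from wind and radiation. The conductor dimensions, resistance, and especially the convection coefficient are illustrative assumptions of mine, not values from LineVision or the Brattle report, so the numbers will not reproduce Marmillo's figure. The point is only the direction of the effect: more wind raises the rating, hotter air lowers it.

```python
import math

STEFAN_BOLTZMANN = 5.670e-8  # W/(m^2 K^4)
FT_PER_S_TO_M_PER_S = 0.3048


def ampacity(wind_m_s, ambient_c, *, max_conductor_c=75.0, diameter_m=0.028,
             resistance_ohm_per_m=8.7e-5, emissivity=0.8, absorptivity=0.8,
             solar_w_per_m2=1000.0):
    """Steady-state current (A) at which heating balances cooling.

    Heat balance per metre of line: I^2 * R + q_solar = q_conv + q_rad.
    Every default parameter value here is an illustrative assumption.
    """
    surface = math.pi * diameter_m  # surface area per metre of conductor
    delta_t = max_conductor_c - ambient_c
    # ASSUMPTION: toy convection coefficient growing with sqrt(wind speed).
    # A real rating would use the detailed correlations of a rating standard.
    h = 5.0 + 12.0 * math.sqrt(max(wind_m_s, 0.0))
    q_conv = h * surface * delta_t
    t_c = max_conductor_c + 273.15
    t_a = ambient_c + 273.15
    q_rad = emissivity * STEFAN_BOLTZMANN * surface * (t_c ** 4 - t_a ** 4)
    q_solar = absorptivity * solar_w_per_m2 * diameter_m
    net_cooling = q_conv + q_rad - q_solar
    if net_cooling <= 0:
        return 0.0  # the line cannot safely carry any current
    return math.sqrt(net_cooling / resistance_ohm_per_m)


calm = ampacity(0.6, 30.0)
breezier = ampacity(0.6 + 3 * FT_PER_S_TO_M_PER_S, 30.0)  # 3 ft/s more wind
hotter = ampacity(0.6, 40.0)                              # 10 C warmer air
print(f"calm {calm:.0f} A, breezier {breezier:.0f} A, hotter {hotter:.0f} A")
```

A static rating amounts to calling this function once with pessimistic weather and using the answer all year; a dynamic rating calls it again and again with measured conditions.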

But transmission system operators do not generally have that kind of real-time information about the heat and sag of their lines. They are forced to estimate, to use an average. In some cases, they assign a line a single “static rating,” well below full capacity. In others, they assign the lines seasonal ratings, adjusting for seasonal conditions. These estimates are, necessarily, conservative.

As a result, “most transmission lines are loaded at 40 or even 30 percent of their rated capacity,” says Marmillo. That’s an enormous amount of usable capacity going unused, to hedge against the lack of information.

That has changed with the development of “dynamic line ratings” (DLRs), whereby lines are continuously monitored and their capacity continuously updated.

DLRs have been around for a couple of decades, but the first generations of devices were cumbersome. They were installed directly on the power lines (which involved taking the lines out of commission) and proved unreliable in operation.

Technology marches on, though, and the latest generation of DLRs is vastly improved. LineVision’s DLR devices, for instance, have “no-contact” installation, which means no messing with the lines; they attach to the transmission tower. They are topped with LIDAR — the same technology used by autonomous vehicles — which gathers fine-grained data that is then crunched to determine the “net effective perpendicular windspeed,” the most important variable for determining line temperature. “We essentially use the conductor as a giant hot wire anemometer,” says Marmillo.

Of course, if you abandon averages in favor of real-time measurement, sometimes capacity will be below what the static average would have indicated. But “we see capacity above static [ratings] about 97 percent of the time,” says Marmillo. It turns out those static ratings are extremely conservative.

Allowing more power to travel through lines relieves grid congestion, which is valuable to grid operators. Marmillo says a recent installation of LineVision’s device on a PJM line paid for itself in three months.

DLRs are particularly cheap if you compare them to more dramatic solutions to grid congestion. “The cost of deploying a DLR system on a transmission line,” says Marmillo, “is less than 5 percent the unit cost of reconstructing or rebuilding the line.”

(Note: there’s an open FERC proposal on the subject of line ratings, in which the commission plans to require seasonal line ratings within two years and to require RTOs and ISOs to put in place, within a year, systems that are prepared for DLRs.)

So that’s technology one: DLRs to better understand and exploit the real-time capacity of existing lines.

Controlling the flow of electricity to ease congestion

The Brattle report says: “Power flow through an AC line is proportional to the sine of the difference in the phase angle of the voltage between the transmitting end and the receiving end of the line,” and I’ll just go ahead and trust them on that.

Left uncontrolled, power will simply cascade through the system according to Kirchhoff's laws. But it is useful for grid operators to be able to route power away from congested areas and toward less congested areas. To do that, they need flow control devices.

The first kind are special transformers called “Phase Angle Regulators” (PARs) that directly manipulate the phase angle to control the flow of power. They are well-known and accepted in the industry, but they are expensive, to the tune of millions of dollars a year, which has limited their deployment to a select few high-traffic lines. Plus, the accelerating pace of change in the electricity system has made their size and inflexibility more problematic. “These are 40-plus-year fixed assets,” says Jenny Erwin, marketing director for Smart Wires Inc., a company that makes flow control devices, and these days, “it's just much harder to plan out what you need 40 years from now.”

The other family of flow control devices is Flexible Alternating Current Transmission Systems (FACTS), which are generally power-electronics devices that control the flow of power through a line or voltage on a system by, for example, increasing or decreasing reactance on a line.
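To make the physics a little less abstract, here is a small sketch of why changing reactance steers power. The first function is the standard textbook form of the sine relationship for a lossless line (the constant of proportionality is my addition, not part of the Brattle quote). The second uses the usual small-angle simplification for two parallel lines. The corridor and its numbers are hypothetical.

```python
import math


def ac_line_flow(v_send, v_recv, reactance, angle_diff_rad):
    """Real power over a lossless line: P = V_s * V_r * sin(delta) / X (per unit)."""
    return v_send * v_recv * math.sin(angle_diff_rad) / reactance


def parallel_split(total_mw, x_a, x_b):
    """Split a transfer across two parallel lines (small-angle approximation).

    Both lines see the same angle difference, so each carries a share
    inversely proportional to its own reactance.
    """
    flow_a = total_mw * x_b / (x_a + x_b)
    return flow_a, total_mw - flow_a


# Hypothetical corridor: line A has half the reactance of line B,
# so it picks up two thirds of a 300 MW transfer.
before = parallel_split(300.0, x_a=0.2, x_b=0.4)

# A series device adds 0.2 per unit of reactance to line A,
# pushing flow off A and onto the less loaded line B.
after = parallel_split(300.0, x_a=0.2 + 0.2, x_b=0.4)

print("before (A, B):", before)
print("after  (A, B):", after)
```

If line A were the congested one, the added reactance moves roughly 50 MW onto line B without touching a single generator. A PAR gets a similar result by shifting the angle rather than the reactance.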

Older versions of FACTS were also quite large and expensive, but due to advances in electronics and control software, they have been made much smaller and more modular. “What used to be done with copper and steel,” Erwin says, “we are able to do with silicon and software.”

Now, reports Brattle, FACTS “typically cost significantly less than PARs, can be manufactured and installed in a shorter time, are scalable, and in many cases, are available in mobile form that can be easily redeployed.” (Smart Wires, a California company that’s been around since 2010, is currently the only company making these modular FACTS.)

Several studies have found that FACTS create value by easing grid congestion and deferring transmission system investments. For example, Brattle summarizes the results of a 2018 study from the Electric Power Research Institute (EPRI): “simulating the 2016 PJM system with 13 power flow control devices placed in optimal locations to reduce thermal overloads indicated annual production cost savings of $67 million. Considering the initial investment cost of $137 million, the payback period is roughly 2 years.”
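The "roughly 2 years" is a simple payback calculation: up-front cost divided by annual savings, with no discounting. A quick check using the two figures quoted above (the function name is mine):

```python
def simple_payback_years(investment, annual_savings):
    """Years for cumulative savings to equal the up-front cost (no discounting)."""
    if annual_savings <= 0:
        raise ValueError("annual savings must be positive")
    return investment / annual_savings


# Figures from the EPRI study as quoted by Brattle, in millions of dollars.
payback = simple_payback_years(investment=137.0, annual_savings=67.0)
print(f"{payback:.2f} years")  # prints "2.04 years"
```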

The possibilities opened up by modular FACTS have only begun to be explored. Most deployments and studies have focused on individual lines, but as more and more lines become dispatchable, it stands to reason that there will be emergent system effects. It’s one thing to have a dispatchable line; it’s another to have a dispatchable grid.

Erwin acknowledges that this is, in fact, Smart Wires’ long-term vision. “We like to think about it as crawl, walk, and run,” she says, and implementing a fully dispatchable grid “would be running.” The company is taking small steps in that direction in the UK, installing FACTS on several lines across a wide swath of territory and linking them up so that they communicate with one another.

But she stresses that the comprehensive vision “is not required to unlock value. You can extract meaningful value today, because every new FACTS adds a degree of control and efficiency.” For much more on this, see this technical report from EPRI and many other reports compiled by Smart Wires.

So that’s technology two: power electronics to control the flow of power across the grid.

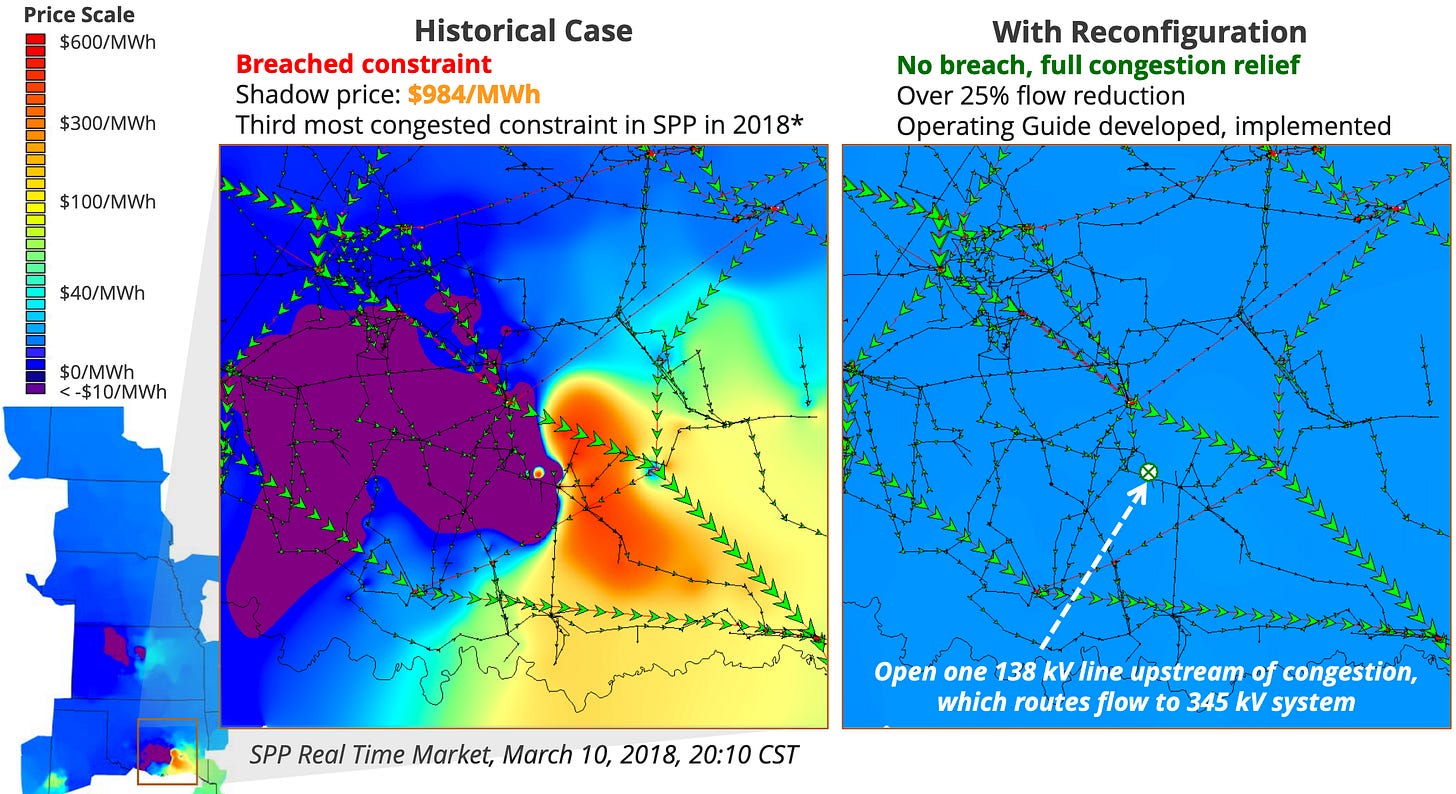

Reconfiguring the grid to route around congestion

The flow of energy through an electricity system is determined by the level of output of the generators, the level of consumption of the loads, and the “topology” (physical configuration) of the transmission lines connecting them.

There is already hardware deployed across the grid, in the form of circuit breakers and communications systems, that can, by switching open or closed, change that topology. Grid operators have long had switching procedures in place to reconfigure the grid as necessary to maintain reliability.

But “finding good reconfigurations is computationally challenging,” says electrical engineer Pablo Ruiz, a consultant at Brattle, associate research professor at Boston University, and co-founder of NewGrid, Inc., a grid software company spun off from an ARPA-E project. Traditionally, reconfigurations have been implemented on a limited, ad hoc basis, guided by operator experience.

Recently, however, engineers have learned to calculate reconfigurations more quickly using software. Thus the budding field of “topology optimization.”
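Here is a toy version of the idea, to show why switching a line out can help and why the search gets hard. It is a sketch under my own assumptions, not NewGrid's method: a three-bus network, the simplified "DC" power flow approximation, and a brute-force loop that tries opening each line in turn. All line data are hypothetical. Real grids have thousands of switchable elements and many combinations, which is where the computational challenge comes from.

```python
def dc_flows(n_bus, lines, injections, slack=0):
    """DC power flow. lines: (from_bus, to_bus, reactance). Returns MW per line,
    or None if the network is split into islands. Because the model is linear,
    MW injections can be mixed with per-unit reactances for illustration."""
    buses = [b for b in range(n_bus) if b != slack]
    row = {b: i for i, b in enumerate(buses)}
    m = len(buses)
    # Augmented matrix [B | P] with the slack bus removed.
    a = [[0.0] * (m + 1) for _ in range(m)]
    for f, t, x in lines:
        for here, there in ((f, t), (t, f)):
            if here in row:
                a[row[here]][row[here]] += 1.0 / x
                if there in row:
                    a[row[here]][row[there]] -= 1.0 / x
    for b, i in row.items():
        a[i][m] = injections[b]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(a[r][col]))
        if abs(a[piv][col]) < 1e-9:
            return None  # singular matrix: some bus is cut off from the slack
        a[col], a[piv] = a[piv], a[col]
        for r in range(m):
            if r != col:
                k = a[r][col] / a[col][col]
                for c in range(col, m + 1):
                    a[r][c] -= k * a[col][c]
    angle = {slack: 0.0}
    for b, i in row.items():
        angle[b] = a[i][m] / a[i][i]
    return [(angle[f] - angle[t]) / x for f, t, x in lines]


def evaluate_openings(n_bus, lines, limits, injections):
    """Worst loading (flow / limit) for the base case (key None) and for
    each single-line opening (key = index of the opened line)."""
    results = {}
    for opened in [None] + list(range(len(lines))):
        kept = [i for i in range(len(lines)) if i != opened]
        flows = dc_flows(n_bus, [lines[i] for i in kept], injections)
        if flows is None:
            continue  # opening this line would island part of the grid
        results[opened] = max(abs(f) / limits[i] for i, f in zip(kept, flows))
    return results


# Hypothetical system: 100 MW generated at bus 0, consumed at bus 2.
LINES = [(0, 2, 0.1), (0, 1, 0.1), (1, 2, 0.1)]  # line 0 is the direct route
LIMITS = [60.0, 100.0, 100.0]                    # MW; the direct route is weak
INJECTIONS = [100.0, 0.0, -100.0]

base_flows = dc_flows(3, LINES, INJECTIONS)
loadings = evaluate_openings(3, LINES, LIMITS, INJECTIONS)
best = min(loadings, key=loadings.get)
print("base flows (MW):", [round(f, 1) for f in base_flows])
print("worst loading by opened line:", {k: round(v, 2) for k, v in loadings.items()})
print("best action:", "leave as is" if best is None else f"open line {best}")
```

In this made-up case the weak direct line hogs two thirds of the power and overloads. Switching it out sends everything over the two sturdier lines, which can just handle it. Counterintuitive, but that is the kind of move the software is hunting for.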

Ruiz draws an analogy with transportation. The old way of handling congestion was to raise tolls on the main roads, convincing drivers to stay home (or in the case of power, generators to curtail their output). Topology control software, Ruiz says, is like the navigation app Waze, showing drivers how they can route around congestion. That will mean less curtailment and less congestion.

The software doesn’t do the reconfiguring itself — that’s still for the grid operator. “The analogy with Waze is actually pretty accurate,” Ruiz says. “It's a decision-support tool. This is not about self-driving cars; the operator is still the driver.”

Naturally, though, it makes me wonder about the possibility of self-driving grids — grids that route power optimally and automatically. Ruiz thinks something like that will eventually happen, but expects a long road of incremental advances in automation before then.

Anyway, in the meantime, recent deployments of topology control software in the UK have shown that “just by optimizing the configuration of the grid, you can increase grid capacity by, depending on system conditions, between 4 and 12 percent,” Ruiz says. “These are very large transfers, so if you can get 10 percent more with existing infrastructure, without any new capital investment, that's a big deal.”

Studies by Brattle in the US and National Grid in the UK have confirmed that topology optimization can relieve transmission constraints and save power consumers tens of millions of dollars annually. “Broad application of the technology for real-time and day-ahead congestion management support would reduce the cost of congestion by about 50 percent,” Ruiz says.

Even with the misaligned incentives of today’s utilities (software investments, unlike infrastructure investments, do not receive a guaranteed rate of return), Ruiz thinks topology control will pencil out for them. It might reduce the need for some smaller transmission-expansion projects, but it will relieve congestion on lower-capacity lines by routing power to (currently underutilized) high-capacity lines, thus improving the economics of those larger, more expensive projects.

Topology control will also improve the business case for a national macrogrid, since it can help ensure that every high-voltage trunk line is fully utilized.

So that’s technology three: software to map out the best and most efficient configuration of the grid, from day to day and hour to hour.

The extensive benefits of GETs

The Brattle report I mentioned at the top of the post recounts several examples of successful deployments of GETs. It estimates that wide deployment would produce benefits that rival the value of creating regional transmission organizations (RTOs) and competitive power markets. The benefits of GETs include not only relieving grid congestion, deferring new capital investments, and saving ratepayers money, but also boosting reliability and resilience and generally improving system performance.

The most interesting attempt to assess the full benefits of GETs comes in a forthcoming report prepared by Brattle for the WATT (Working for Advanced Transmission Technologies) Coalition, a group of companies developing GETs.

The study won’t be released until February 24, and unfortunately, the folks at the WATT Coalition are too short-sighted to allow me to share the results in advance (grumble).

I can say, though, that it is a detailed engineering analysis focused on a single (wind-rich, increasingly congested) transmission region. It examines the effects of a full deployment of GETs across the region.

Long story short, GETs double the amount of new renewables the regional grid is able to accommodate through 2025. Building enough new power lines to do that would be wildly expensive and take decades; GETs do it almost immediately, with an investment that pays for itself in about six months. Deploying them also creates jobs, reduces carbon emissions, and saves the region money.

In terms of the clean-energy transition, GETs are an easy win, a quick way to bring more renewables online and reduce emissions while also, helpfully, saving money. Utilities just need to do it.

Making utilities want to get GETs

The core problem for GETs is the same problem I have been identifying for years: the incentive system in which US energy utilities operate. They do not make money by selling electricity or by providing superior service. They make money by receiving a guaranteed rate of return on capital investments.

Naturally, they want to make more capital investments.

If a technology comes along — energy efficiency, distributed energy resources (DERs), or GETs — that promises to defer or even head off the need to make new capital investments, the utility’s profits are directly threatened. All those technologies may serve the public’s social, economic, and environmental goals, but they do not serve the utilities’ financial interests.

Even when utilities do not face a disincentive to improve their operational performance, they have no positive incentive, no reason to set aside money and resources. The costs of congestion and interconnection backups are simply passed along to ratepayers.

Everyone in clean energy is aware of this basic incentives mismatch. “It's really an incentives issue,” says Erwin. “Clearly there is a misalignment in incentives,” says Ruiz. “There's no question about it.”

Consequently, deployment of GETs remains confined to a few demonstration projects. Brattle summarizes:

The slow pace of adoption of these new technology options may largely be driven by two factors. First, the technology options by themselves are not being recognized enough for their capabilities. … Second, there is insufficient incentive for either the transmission operators or owners—the two market players who are best suited to adopt these technologies—to innovate and change their operations, which requires a concerted effort.

Reforming US utilities is a mountainous task, and nobody has time to wait around on it. In the meantime, the best that can be done is to create incentives where they are now lacking.

FERC can do so by mandating that utilities and RTOs examine alternatives to new transmission — upgrades to the existing system — in transmission and operational planning processes. It can implement new rules that reward utilities for meeting performance metrics, so-called “performance-based regulation” (as is common in the UK and Australia). It can encourage benefit-sharing (and cost-sharing) among transmission owners and other market participants, both sharing congestion costs and spreading out the benefits of new GETs. And FERC could push utilities to subject new GETs projects to competitive bidding.

A group of 13 senators recently wrote a letter to FERC asking that it take these steps to encourage GETs.

Congress could help by offering tax credits or other financial incentives to utilities to improve existing transmission systems with GETs. Money is the best incentive of all.

And maybe, someday, we could think about reforming utilities root and branch, to once and for all align their incentives with pro-social behavior. A fella can dream.

GETs are part of the digitization of energy

One of my pet theories — which I first wrote about in 2016 — is that Vaclav Smil is wrong. Smil is a venerable energy analyst famous for throwing cold water on all the talk of a rapid transition to clean energy; he points out that previous energy transitions have taken more like a century than a decade.

One reason I think the clean energy transition will move faster is that it is not merely a transition from one set of physical energy sources to another (though it is that too). It is also in part a transition from the physical to the digital.

Where physical commodities generally get more expensive over time, computing power is consistently getting cheaper and cheaper. In area after area, engineers are figuring out how to substitute “intelligence for stuff” — i.e. computing power for commodities.

Think, for instance, about solar trackers. Solar panel manufacturers used to experiment with a variety of shapes for panels, to try to catch more of the sun’s energy as it passes overhead; that manufacturing is expensive. Now panels can be mounted on trackers that automatically sense and follow the sun — so manufacturers mainly just make flat panels. The intelligence of the trackers has substituted for the stuff of the panels. (See also: digital circuit breakers that allow building owners to get more out of their existing electrical systems.)

(I had to mention digital circuit breakers as an excuse to show this amazing video.)

GETs are another great example. The same physical grid can virtually double its capacity through the combined application of GETs: better sensing, better calculating, and better control. Rather than make twice as much grid, we can make a grid twice as smart. Intelligence for stuff.

Inevitably, as the costs of sensors, chips, and computing power continue to decline, we are going to infuse them into all our infrastructure: transportation, buildings, and power. We are going to get more performance out of our existing capital stock through the application of intelligence.

Once progress is hitched to computing power, the clean energy transition will no longer be limited by the slow innovation and turnover cycles of physical commodities and machines. Things move much more quickly in the digital space.

Consequently, the transmission grid we’ve already got may have much more potential than we’ve given it credit for. But fulfilling that potential will involve pushing utilities to value getting more out of the transmission assets they already own. It’s a fairly easy fix, for a large impact. Biden should get on it.